Data modeling

What Is a Data Model?

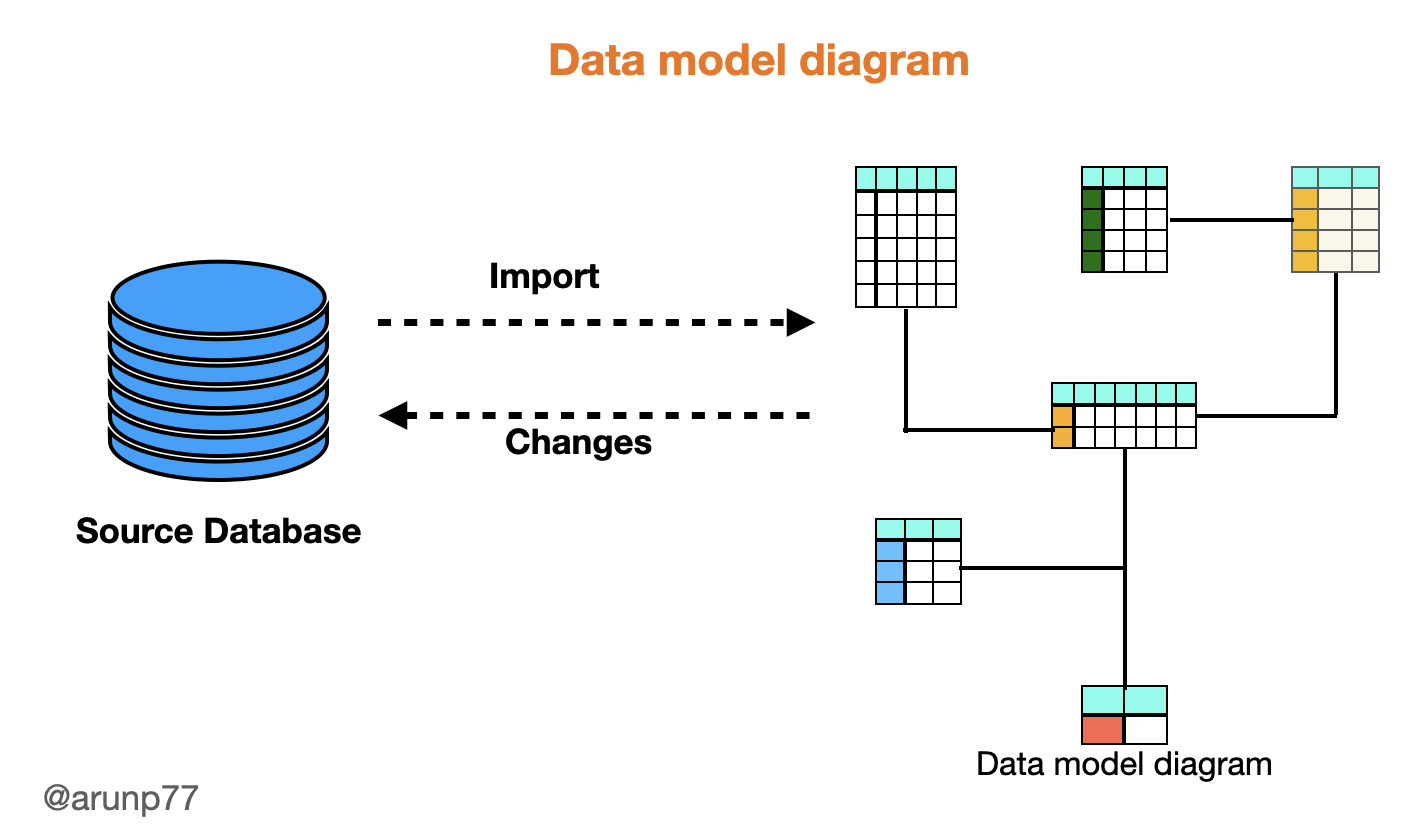

- A data model is a conceptual representation of data that defines the structure, relationships, and constraints of data elements within a system or organization. It serves as a blueprint for how data is organized and stored, allowing for efficient data management, retrieval, and analysis.

- Data models are visual representations of an enterprise’s data elements and the connections between them. By helping to define and structure data in the context of relevant business processes, models support the development of effective information systems. They enable business and technical resources to collaboratively decide how data will be stored, accessed, shared, updated and leveraged across an organization.

What is the purpose of a data model?

Data models play a key role in bringing together all segments of an enterprise – IT, business analysts, management and others – to cooperatively design information systems (and the databases they rely on). These systems require properly defined and formatted data, and models shine a clear light on what data is required and how it must be structured to support the desired business processes. By explicitly determining the structure of your data, these models support a variety of use cases, including database modeling, information system design, and process development in support of a consistent, clean exchange of data. It’s also important to understand the three different types of data models. Each will serve a different purpose as you work through the data modeling process. The purposes of a data model are as follows:

- Structural Clarity: It provides a clear and organized structure for data, defining how data entities (e.g., customers, products) are related to each other and how attributes (e.g., names, IDs) are organized.

- Data Integrity: Data models enforce constraints and rules that ensure data accuracy and consistency. This helps in preventing data anomalies and errors.

- Data Management: Data models assist in data storage, retrieval, and management. They define how data is stored in databases, making it easier to work with data efficiently.

- Communication: Data models act as a common language between business stakeholders, analysts, and developers, facilitating clear communication about data requirements and structures.

- Analysis and Reporting: They enable data analysts and scientists to perform complex queries, analytics, and reporting, as they understand how data is organized.

- Database Design: Data models are used in the design of databases and data warehouses, helping in the creation of tables, indexes, and relationships.

Types of data models

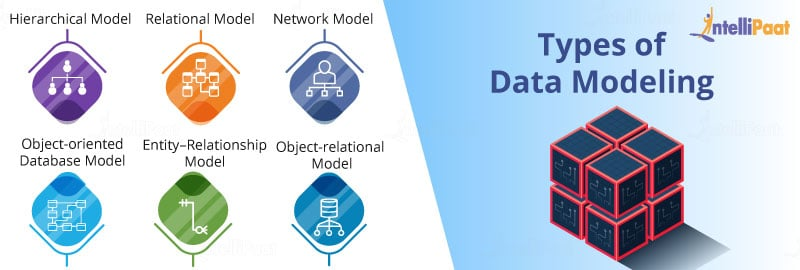

There are several types of data models, including:

- Conceptual Data Model: This provides a high-level (big-picture view), abstract view of the data in an organization, focusing on entities and their relationships without delving into technical details.

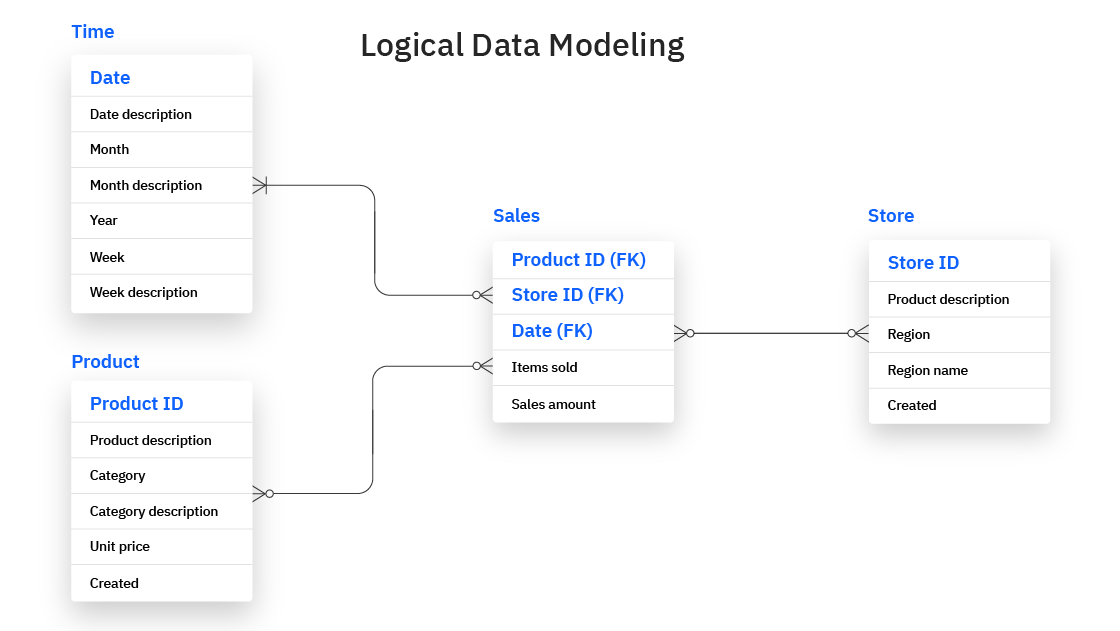

- Logical Data Model: It defines data structures in a more detailed manner, including entities, attributes, and their relationships. It is technology-independent and focuses on what needs to be stored.

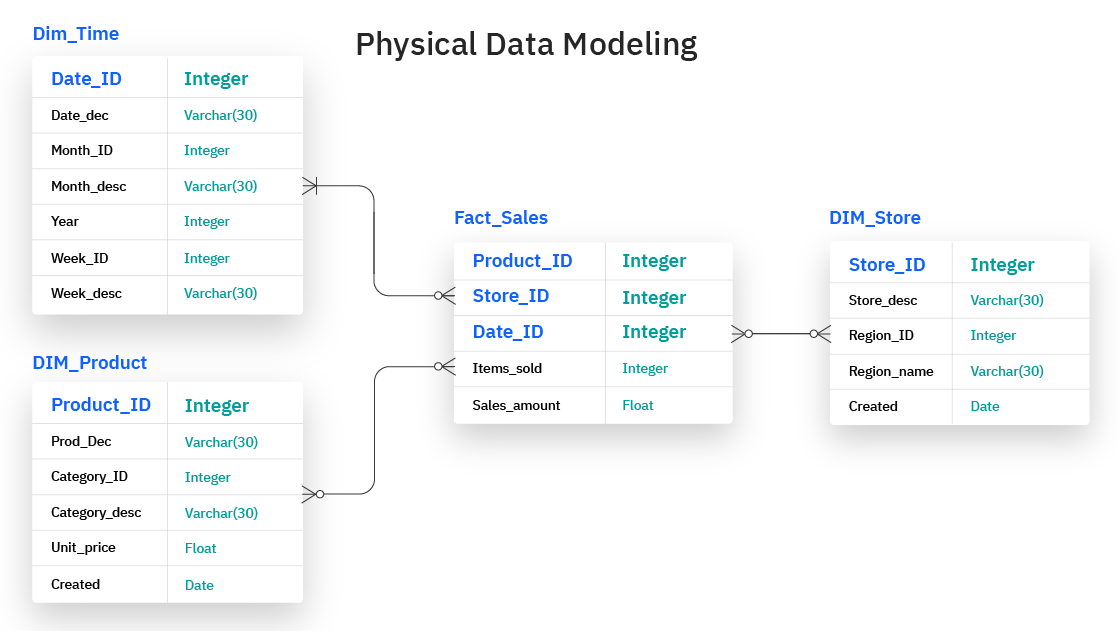

- Physical Data Model: They provide a schema for how the data will be physically stored within a database. As such, they’re the least abstract of all. They offer a finalized design that can be implemented as a relational database, including associative tables that illustrate the relationships among entities as well as the primary keys and foreign keys that will be used to maintain those relationships. Physical data models can include database management system (DBMS)-specific properties, including performance tuning.

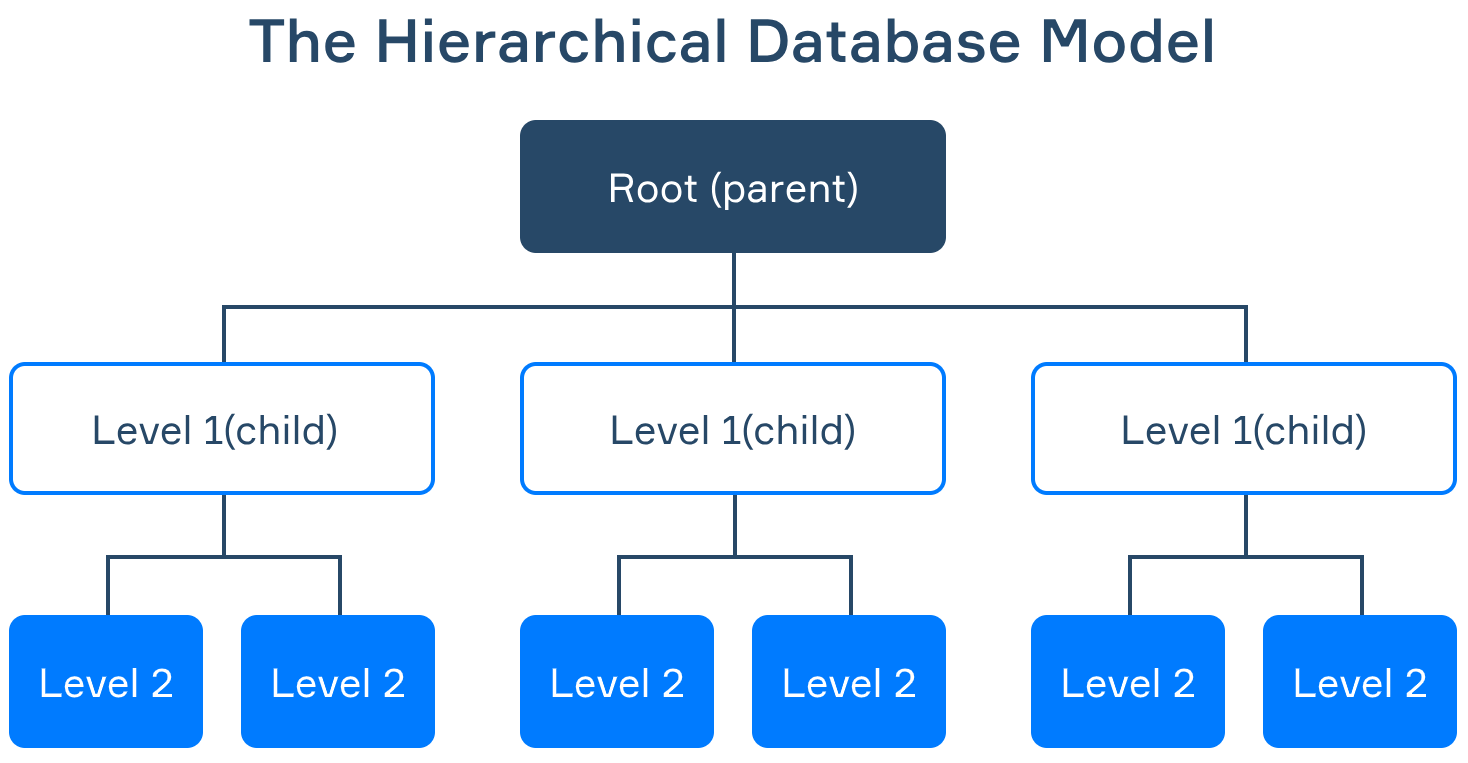

- Hierarchical Data Model: It organizes data in a tree-like structure with parent-child relationships, where each parent can have multiple children but each child can have only one parent.

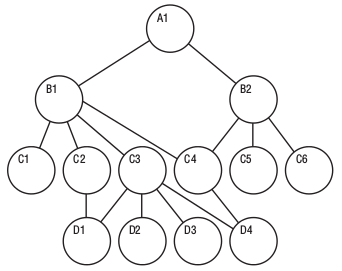

- Network Data Model: It extends the hierarchical model by allowing multiple relationships between records, providing more flexibility. Instead of only allowing each child to have one parent, this model allows each child to have multiple parents (it calls the children members and the parents owners). It addresses the need to model more complex relationships such as the orders/parts many-to-many relationship mentioned in the hierarchical article. In following figure, A1 has two members, B1 and B2. B1. is the owner of C1, C2, C3 and C4. However, in this model, C4 has two owners, B1 and B2.

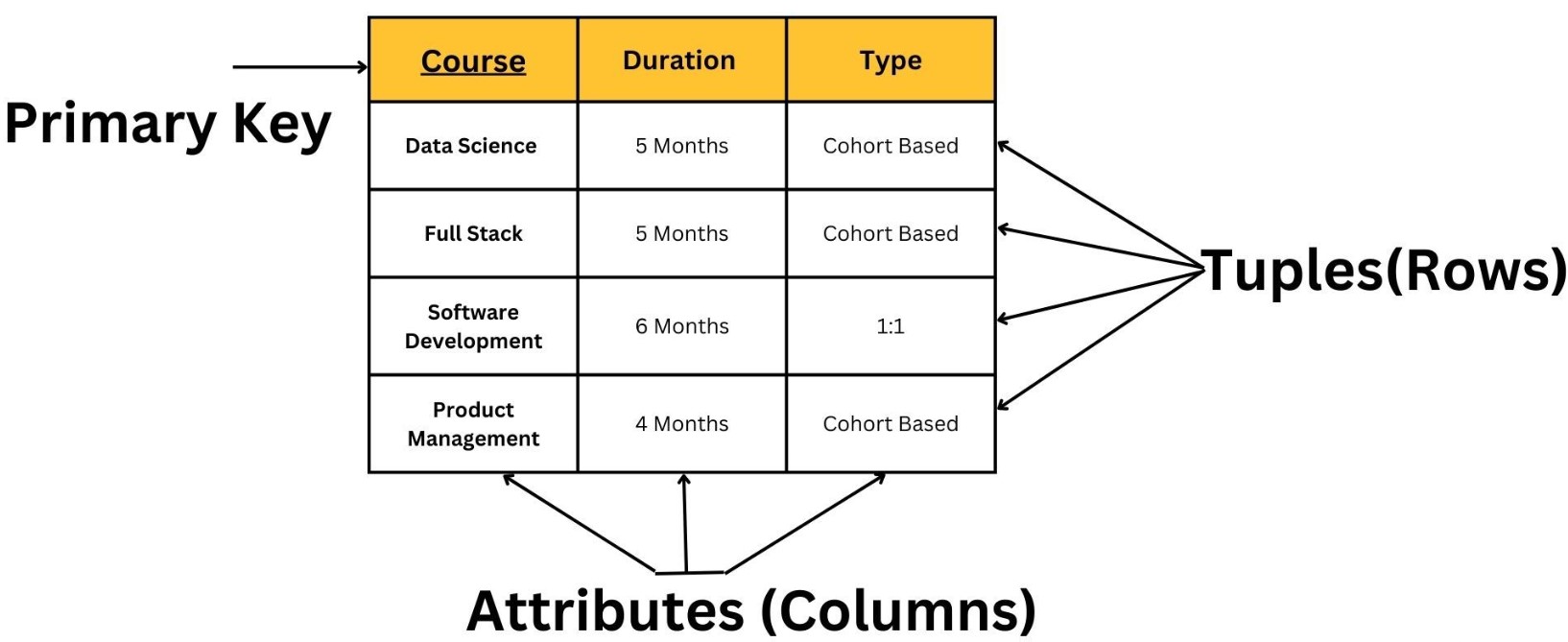

- Relational Data Model: It represents data as tables with rows and columns, where each table has a primary key and can be related to other tables through foreign keys. It's the foundation for relational databases like MySQL and PostgreSQL.

- Entity-Relationship Model (ERD): Entity relationship diagram describes the structure of the database. The entity relationship diagram shows entities (tables in relational databases) and relationships between tables that exist in the database. For a good database design, it is very important to have an entity relationship diagram. In an entity relationship diagram, the basic elements are:

- Entities (tables)

- Attributes (table columns)

- Relationships (relationships between tables)

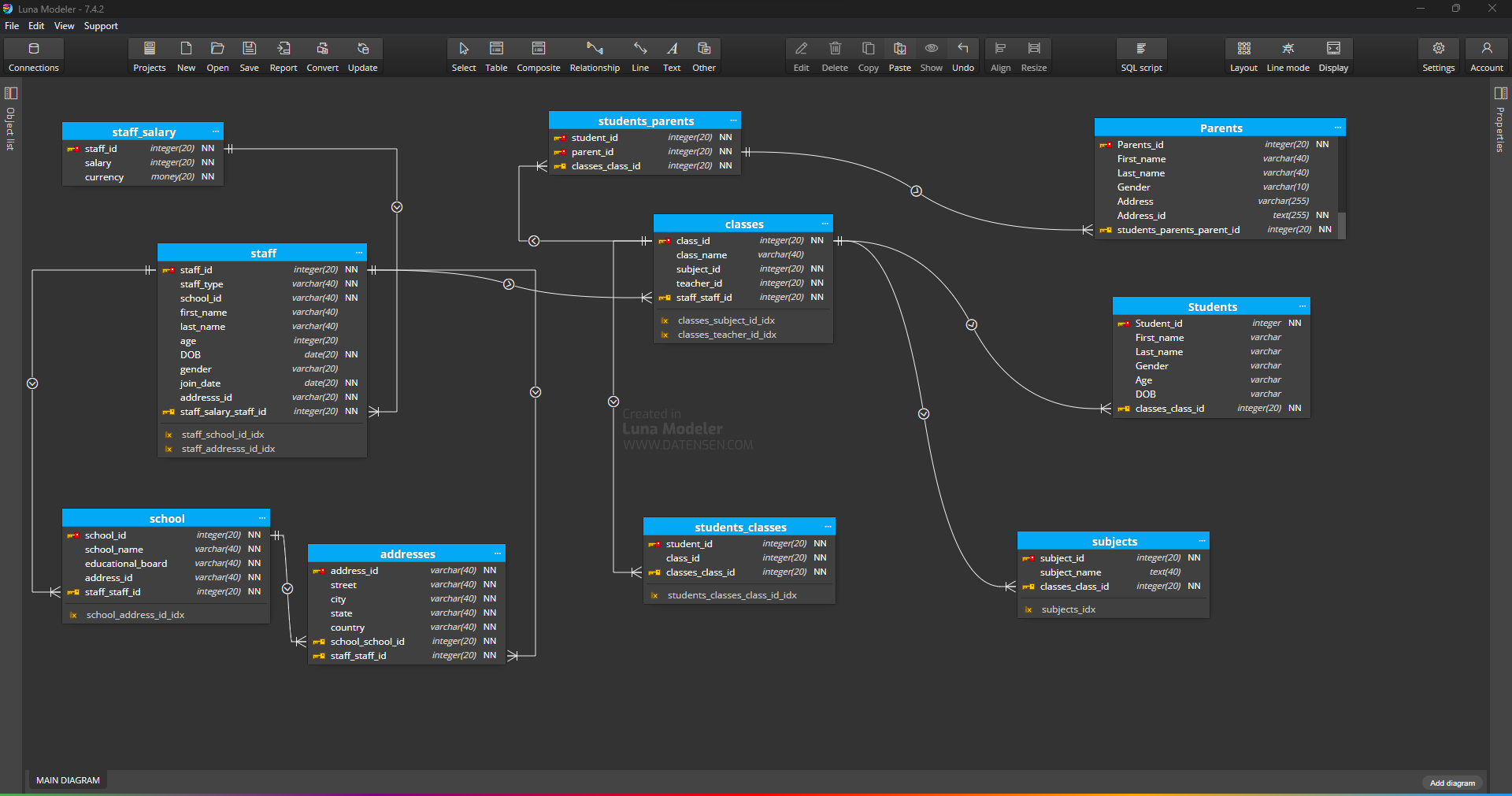

Image description: This image showcases my data modeling work at Luna data modeler. In this project, I created a comprehensive school data model, encompassing various critical elements such as class data, staff members, staff salaries, school details, addresses, parent information, student profiles, and subject details. This model serves as the foundation for organizing and managing crucial data related to the school environment. If you believe additional information would be beneficial, please feel free to suggest any specific details you'd like me to include.

Data Modeling Tools

- Luna modeler: Luna is a data modeling tool that is specifically designed for relational databases. It is a desktop application that is available for Windows, macOS, and Linux.

- ER/Studio: ER/Studio is a powerful data modeling tool that supports a variety of data models, including relational, hierarchical, network, and graph data models. ER/Studio also provides a variety of features to help you with data modeling, such as automatic entity discovery, reverse engineering, forward engineering, and validation.

- DbSchema Pro: DbSchema Pro is a free and open-source data modeling tool that is easy to use and supports a variety of database platforms. DbSchema Pro provides a variety of features to help you with data modeling, such as visual data modeling, schema synchronization, and database reporting.

- Erwin Data Modeler: Erwin Data Modeler is a popular data modeling tool that is known for its ease of use and powerful features. Erwin Data Modeler supports a variety of data models, including relational, hierarchical, network, and graph data models. Erwin Data Modeler also provides a variety of features to help you with data modeling, such as automatic entity discovery, reverse engineering, forward engineering, and validation.

- Archi: Archi is a data modeling tool that is specifically designed for enterprise architects. Archi supports a variety of data models, including relational, hierarchical, network, and graph data models. Archi also provides a variety of features to help you with data modeling, such as business process modeling, enterprise architecture modeling, and impact analysis.

- SQL Database Modeler: SQL Database Modeler is a free and open-source data modeling tool that is designed to help you create, edit, and document database models. SQL Database Modeler supports a variety of database platforms and provides a variety of features to help you with data modeling, such as visual data modeling, schema synchronization, and database reporting.

- Lucidchart: Lucidchart is a popular online diagramming tool that can also be used for data modeling. Lucidchart provides a variety of data modeling templates and symbols, as well as a variety of features to help you with data modeling, such as collaboration and real-time editing.

- PgModeler: PgModeler is a free and open-source data modeling tool that is specifically designed for PostgreSQL databases. PgModeler provides a variety of features to help you with data modeling, such as visual data modeling, schema synchronization, and database reporting.

- IBM InfoSphere Data Architect: IBM InfoSphere Data Architect is a powerful data modeling tool that is designed for enterprise businesses. IBM InfoSphere Data Architect supports a variety of data models, including relational, hierarchical, network, and graph data models. IBM InfoSphere Data Architect also provides a variety of features to help you with data modeling, such as automatic entity discovery, reverse engineering, forward engineering, and validation.

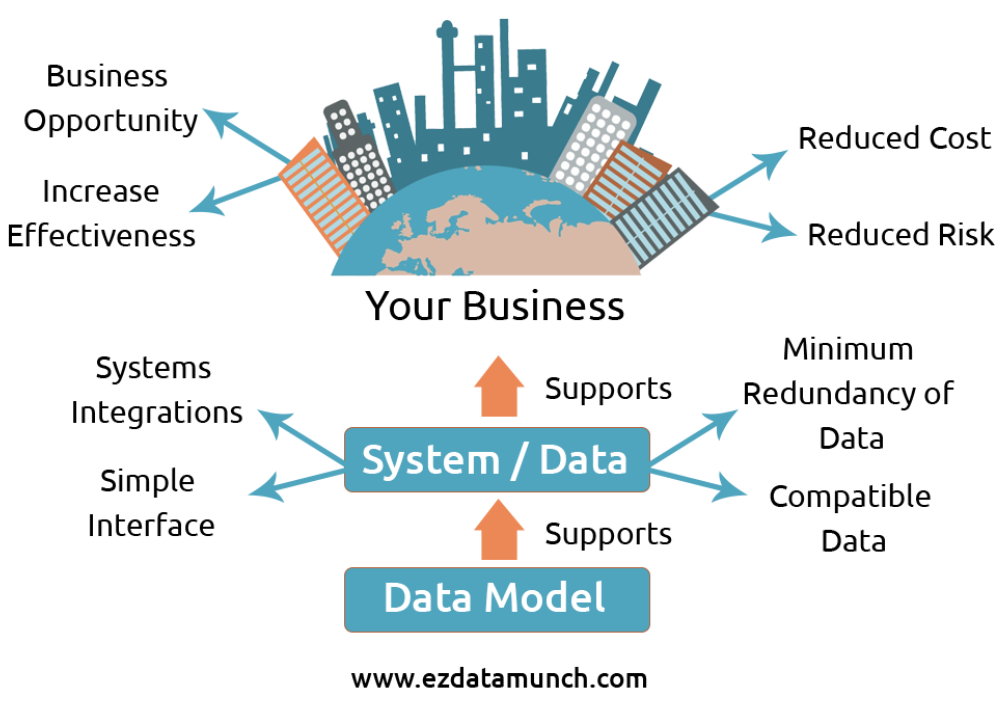

Benefits of data modeling

Data modeling makes it easier for developers, data architects, business analysts, and other stakeholders to view and understand relationships among the data in a database or data warehouse. In addition, it can:

- Reduce errors in software and database development.

- Increase consistency in documentation and system design across the enterprise.

- Improve application and database performance.

- Ease data mapping throughout the organization.

- Improve communication between developers and business intelligence teams.

- Ease and speed the process of database design at the conceptual, logical and physical levels.

Data modeling process

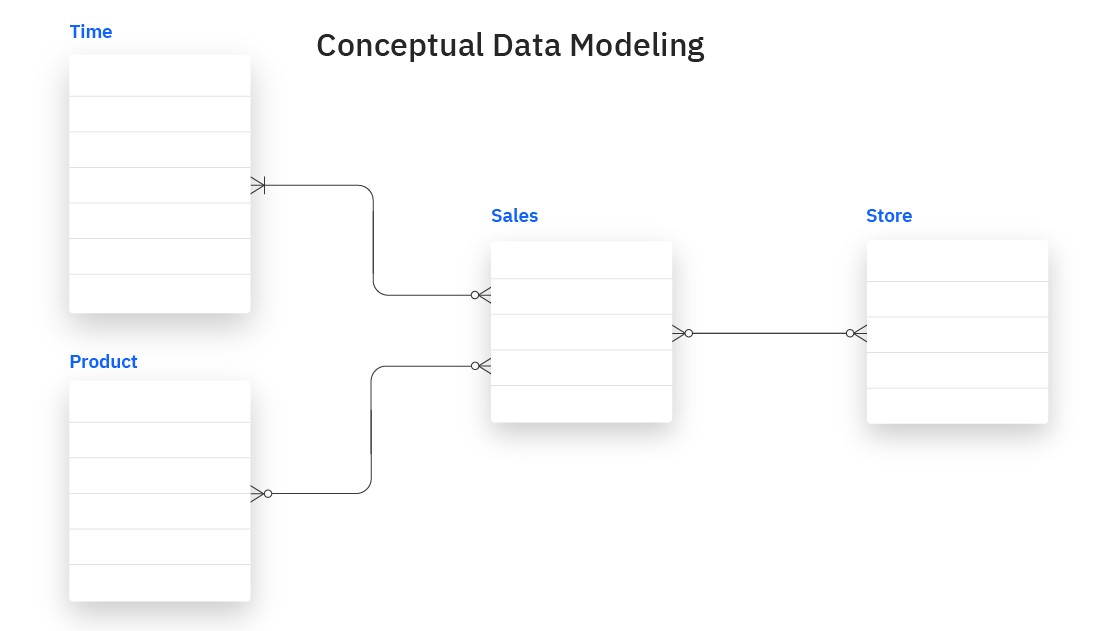

The data modeling process involves designing and creating a conceptual representation of how data should be organized, structured, and stored in a database system. It typically consists of the following steps:

- Identify Requirements: Understand the requirements of the system or application for which the data model is being developed. This involves gathering information from stakeholders, business analysts, and subject matter experts to determine the entities, attributes, relationships, and constraints that need to be represented.

- Conceptual Data Model: Create a conceptual data model that represents the high-level entities, their attributes, and the relationships between them. The conceptual model focuses on the business or domain concepts and provides an abstract view of the data requirements. Common techniques for creating conceptual models include entity-relationship (ER) diagrams or object-oriented models.

- Normalize the Model (Optional):If working with a relational database, the conceptual model can be normalized to eliminate redundancies and improve data integrity. This step involves applying normalization rules to ensure data is organized efficiently and avoid data anomalies. Normalization involves decomposing entities and defining primary keys, foreign keys, and relationships.

- Logical Data Model: Transform the conceptual model into a logical data model. The logical model represents the data structures and relationships using a specific notation or language. For relational databases, this often involves creating tables, defining columns, specifying data types, and establishing relationships using primary and foreign keys. In other database models, such as document-oriented or graph databases, the logical model may have different representations.

- Validate and Refine: Review and validate the logical data model to ensure it accurately represents the requirements. Collaborate with stakeholders to verify the model's correctness and completeness. Make refinements and adjustments as necessary to address any inconsistencies or issues identified during the validation process.

- Physical Data Model: Convert the logical data model into a physical data model that considers the specific database management system (DBMS) being used. This step involves translating the logical model into the technical implementation details, including specific database structures, indexes, storage considerations, and optimization techniques. The physical data model reflects the technical specifications required for data storage and retrieval.

- Implement and Deploy: Once the physical data model is finalized, it serves as the blueprint for implementing the database schema in the chosen DBMS. Create the necessary database objects (tables, indexes, views, etc.) based on the physical model. Load the data into the database and ensure that it aligns with the defined model.

Some other interesting things to know:

- Visit the Data mining tutorial

- Visit my repository on GitHub for Bigdata, Databases, DBMS, Data modling, Data mining.

- Visit my website on SQL.

- Visit my website on PostgreSQL.

- Visit my website on Slowly changing variables.

- Visit my website on SNowflake.

- Visit my website on SQL project in postgresql.

- Visit my website on Snowflake data streaming.