Machine learning: Reinforcement Learning

Introduction

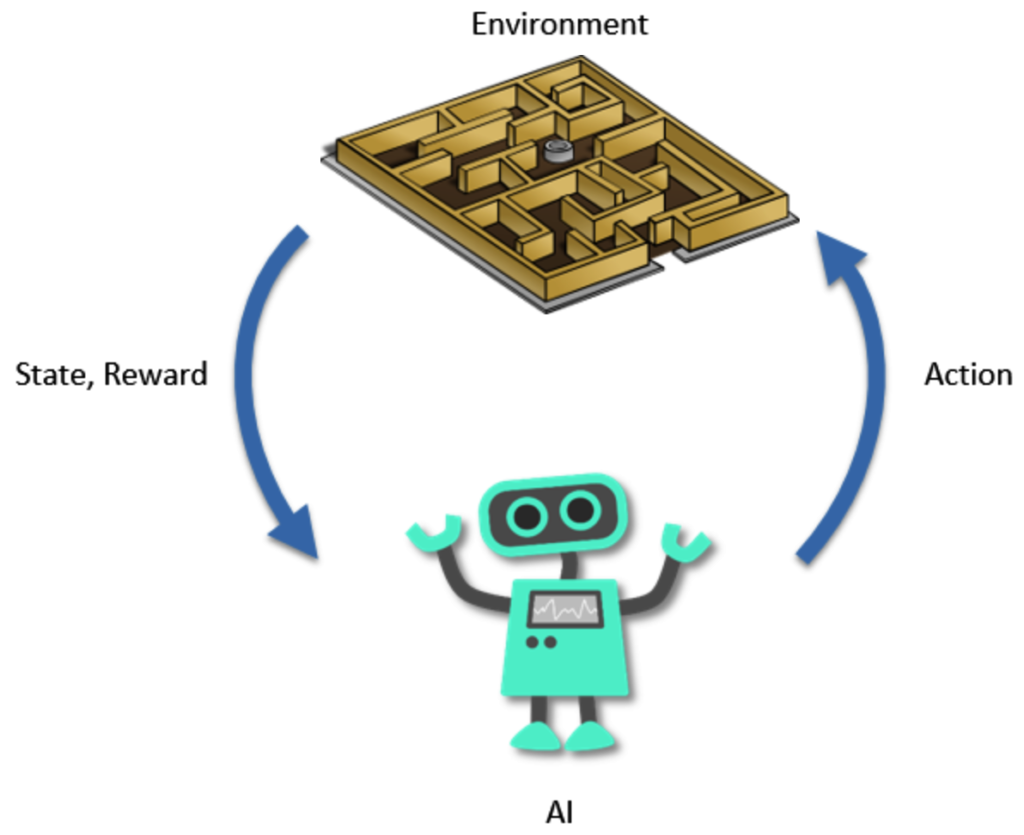

Reinforcement Learning (RL) is a subfield of machine learning where an agent learns to make decisions by interacting with an environment. The core idea is that the agent observes the state of the environment, takes actions, and receives feedback in the form of rewards. The goal is to learn a policy that maximizes cumulative reward over time.
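The observe, act, receive-reward cycle described above can be sketched as a short loop. This is a minimal illustration, not a reference implementation: `CoinFlipEnv`, `run_episode`, and `always_heads` are hypothetical names invented for this example, and the `reset`/`step` interface is an assumption loosely modelled on common RL toolkits.

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: the agent guesses a biased coin flip."""

    def __init__(self, p_heads=0.8, episode_length=10, seed=0):
        self.p_heads = p_heads
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        # The state is simply the index of the current step.
        self.t = 0
        return self.t

    def step(self, action):
        # action: 0 = guess tails, 1 = guess heads
        outcome = 1 if self.rng.random() < self.p_heads else 0
        reward = 1.0 if action == outcome else 0.0
        self.t += 1
        done = self.t >= self.episode_length
        return self.t, reward, done


def run_episode(env, policy):
    """Run one episode and return the cumulative (undiscounted) reward."""
    state = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(state)                  # agent picks an action
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # feedback accumulates
    return total_reward


def always_heads(state):
    """A fixed policy: ignore the state and always guess heads."""
    return 1


if __name__ == "__main__":
    print(run_episode(CoinFlipEnv(), always_heads))
```

Here the policy is fixed; a learning agent would use the rewards it collects to change `policy` between or during episodes.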

Core Components of Reinforcement Learning:

- Agent: The learner or decision-maker.

- Environment: Everything the agent interacts with.

- State (S): A representation of the current situation.

- Action (A): A move the agent can take; the set of all available moves is the action space.

- Reward (R): A scalar feedback signal; guides the agent.

- Policy (π): Strategy used by the agent to decide actions.

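- Value Function (V): Estimates the expected cumulative future reward from a given state when following the policy.

- Q-Function (Q): Estimates the expected cumulative future reward of taking a given action in a given state and following the policy afterwards.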

- Model (optional): Predicts the next state and reward.
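The value function and Q-function in the list above are usually written as expectations over the return. The formulation below assumes a discount factor γ in [0, 1) and the time-indexed notation S_t, A_t, R_t, neither of which appears in the text above; it is one common convention rather than the only one.

```latex
\begin{aligned}
G_t &= \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1}
  && \text{(discounted return)} \\
V^{\pi}(s) &= \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]
  && \text{(value of a state under policy } \pi \text{)} \\
Q^{\pi}(s, a) &= \mathbb{E}_{\pi}\left[ G_t \mid S_t = s,\ A_t = a \right]
  && \text{(value of a state-action pair)} \\
V^{\pi}(s) &= \sum_{a} \pi(a \mid s)\, Q^{\pi}(s, a)
  && \text{(how the two are related)}
\end{aligned}
```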

Types of Reinforcement Learning

- Model-Free RL: The agent learns a policy or value function directly from interaction, without learning a model of the environment's dynamics.

- Model-Based RL: The agent builds a model of the environment and plans.
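To make the model-based idea concrete, the sketch below first estimates a model from sampled transitions and then plans on that model with value iteration. Everything here is an assumption made for illustration: the 4-state chain, the names `true_step`, `learn_model`, and `plan`, and the choice of value iteration as the planner.

```python
import random
from collections import defaultdict

# Hypothetical 4-state chain: states 0..3, actions 0 (left) and 1 (right).
# Entering state 3 yields reward 1 and ends the episode.
N_STATES, ACTIONS, GOAL = 4, (0, 1), 3


def true_step(state, action):
    """The real environment, which the agent can only sample from."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def learn_model(n_samples=500, seed=0):
    """Estimate transition probabilities and mean rewards from samples."""
    rng = random.Random(seed)
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    for _ in range(n_samples):
        s = rng.randrange(GOAL)      # sample a non-terminal state
        a = rng.choice(ACTIONS)
        s2, r = true_step(s, a)
        counts[(s, a)][s2] += 1
        reward_sum[(s, a)] += r
    model = {}
    for sa, nexts in counts.items():
        total = sum(nexts.values())
        probs = {s2: c / total for s2, c in nexts.items()}
        model[sa] = (probs, reward_sum[sa] / total)
    return model


def plan(model, gamma=0.9, sweeps=50):
    """Value iteration on the learned model (assumes every pair was sampled)."""
    values = [0.0] * N_STATES        # the terminal state keeps value 0

    def q(s, a):
        probs, r = model[(s, a)]
        return r + gamma * sum(p * values[s2] for s2, p in probs.items())

    for _ in range(sweeps):
        for s in range(GOAL):
            values[s] = max(q(s, a) for a in ACTIONS)
    policy = {s: max(ACTIONS, key=lambda a: q(s, a)) for s in range(GOAL)}
    return values, policy


if __name__ == "__main__":
    values, policy = plan(learn_model())
    print(values, policy)
```

A model-free method would skip `learn_model` entirely and update its value estimates or policy from each sampled transition.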

Main Categories of RL Algorithms

- Value-Based Methods: The agent learns the value of states or state-action pairs.

  - Q-Learning

  - Deep Q-Network (DQN)

  - Double DQN

  - Dueling DQN

  - Distributional DQN

  - Rainbow DQN (combines several DQN improvements)

  - SARSA (State-Action-Reward-State-Action)

- Policy-Based Methods: The agent directly learns the policy.

  - REINFORCE (Monte Carlo Policy Gradient)

  - Policy Gradient (PG)

  - Stochastic Policy Gradient

  - Deterministic Policy Gradient

- Actor-Critic Methods: These combine value-based and policy-based methods.

  - A2C (Advantage Actor-Critic)

  - A3C (Asynchronous Advantage Actor-Critic)

  - Deep Deterministic Policy Gradient (DDPG)

  - Twin Delayed DDPG (TD3)

  - Soft Actor-Critic (SAC)

  - Proximal Policy Optimization (PPO)

  - Trust Region Policy Optimization (TRPO)
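As a concrete instance of a value-based method, here is a minimal sketch of tabular Q-learning with epsilon-greedy exploration. The 5-state chain environment, the function names `step` and `q_learning`, and the hyperparameter values are all assumptions chosen for illustration.

```python
import random

# Hypothetical 5-state chain: start in state 0, goal is state 4.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = left, 1 = right


def step(state, action):
    """Deterministic chain dynamics; reward 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action selection, breaking ties at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning target: bootstrap from the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    for s, row in enumerate(q_learning()):
        print(s, [round(v, 3) for v in row])
```

SARSA differs only in the target: it bootstraps from the action the agent actually takes next instead of the maximum over actions. The deep variants in the list replace the table `q` with a neural network.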

Specialized & Advanced RL Approaches:

- Multi-Agent Reinforcement Learning (MARL): Multiple agents interact and learn.

- Hierarchical Reinforcement Learning (HRL): Breaks tasks into subtasks.

- Inverse Reinforcement Learning (IRL): Learns the reward function from expert behavior.

- Offline Reinforcement Learning: Learning from fixed datasets without active interaction.

- Meta-Reinforcement Learning: RL algorithms that adapt to new tasks quickly.

- Imitation Learning: Learns from observing expert demonstrations.

Summary

| Method Category | Popular Algorithms |
|---|---|
| Value-Based | Q-Learning, DQN, SARSA |
| Policy-Based | REINFORCE, Policy Gradient |
| Actor-Critic | A2C, A3C, PPO, DDPG, SAC, TD3 |
| Advanced RL | HRL, MARL, IRL, Meta-RL |
