Activation function

Introduction

Activation functions are a fundamental component in the architecture of neural networks. They introduce non-linearity into the network, enabling it to learn and perform more complex tasks. Without activation functions, a neural network would simply be a linear regression model, incapable of handling the intricacies of real-world data.What is an Activation Function?

An activation function defines the output of a neuron given an input or set of inputs. It is a crucial part of neural networks, allowing them to model complex data and learn from it. Activation functions determine whether a neuron should be activated or not, based on the weighted sum of inputs received and a bias.Types of Activation Functions

There are several types of activation functions used in deep learning, each with its own advantages and disadvantages. The most commonly used activation functions include:- Linear Activation Function

- Non-Linear Activation Functions:

- Sigmoid

- Hyperbolic Tangent (Tanh)

- Rectified Linear Unit (ReLU)

- Leaky ReLU

- Parametric ReLU (PReLU)

- Exponential Linear Unit (ELU)

- Swish

- Softmax

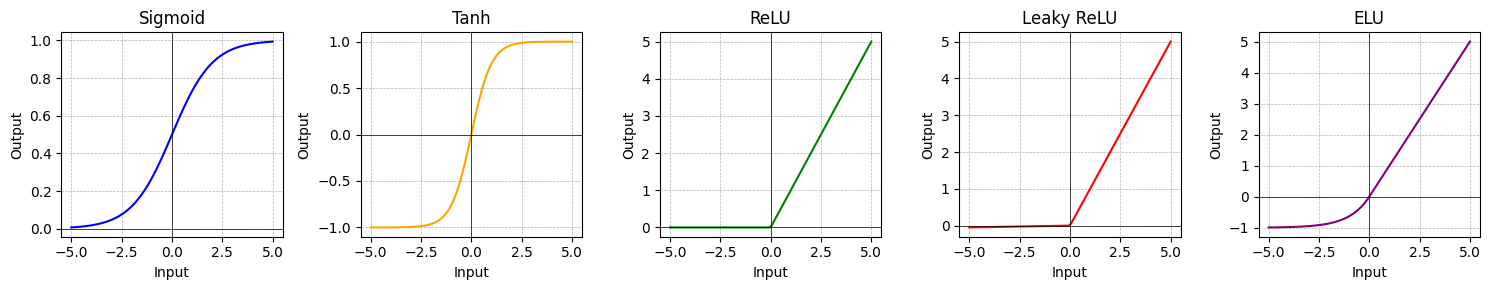

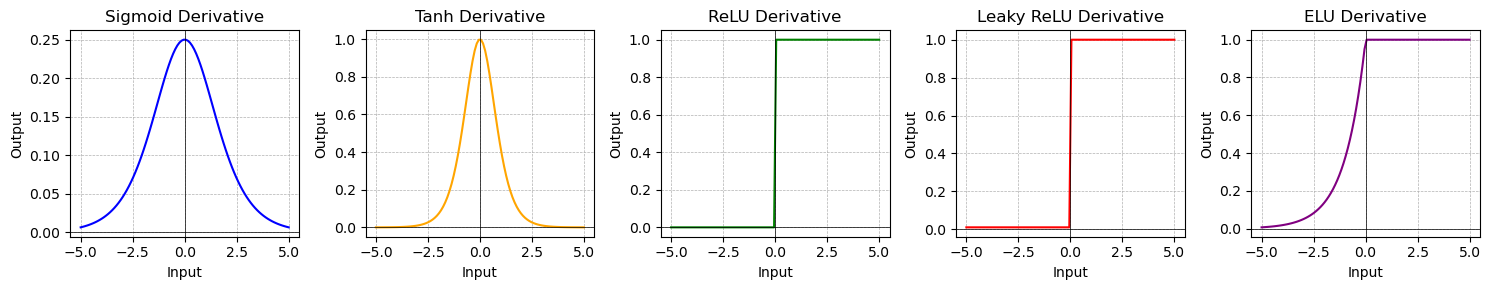

- Sigmoid Function:

- Formula: $$\sigma(x) = \frac{1}{1+ e^{-x}}$$

- Range: (0, 1)

- Description: Sigmoid function squashes the input values to a range between 0 and 1. It is useful in binary classification tasks where the output needs to be interpreted as probabilities.

- Shortcomings:

- Vanishing Gradient: Sigmoid functions saturate for large positive or negative inputs, leading to vanishing gradients during backpropagation, which can slow down or hinder learning, especially in deep networks.

- Output Range: The output of the sigmoid function is not centered around zero, which may result in unstable gradients and slower convergence when used in deep networks.

- Hyperbolic Tangent (Tanh) Function:

- Formula: $$\text{tanh}(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}}$$

- Range: (-1,1)

- Description: Tanh function squashes the input values to a range between -1 and 1, making it suitable for classification tasks where the output needs to be centered around zero. Tanh functions are commonly used in hidden layers of neural networks, especially in recurrent neural networks (RNNs), to capture non-linearities and maintain gradients within a centered range.

- Shortcomings:

- Vanishing Gradient: Similar to the sigmoid function, tanh functions also suffer from the vanishing gradient problem for large inputs, particularly in deep networks.

- Saturation: Tanh functions saturate for large inputs, leading to slower convergence and potentially unstable gradients.

- Rectified Linear Unit (ReLU):

- Formula: $$f(x) = \text{max}(0,x)$$

- Range: \([0, +∞]\)

- Description: ReLU function returns 0 for negative inputs and the input value for positive inputs. It is the most commonly used activation function in deep learning due to its simplicity and effectiveness. ReLU functions are widely used in deep learning due to their simplicity and effectiveness. They allow for faster convergence and are less prone to vanishing gradients compared to sigmoid and tanh functions.

- Shortcomings:

- Dying ReLU: ReLU neurons can become inactive (or "die") for negative inputs during training, leading to dead neurons and a sparse representation of the input space. This issue is addressed by variants such as Leaky ReLU and Parametric ReLU.

- Unbounded Output: ReLU functions have an unbounded output for positive inputs, which may lead to exploding gradients during training, especially in deeper networks.

- Leaky ReLU:

- Formula:

$$f(x) = \begin{cases} x, & \text{if } x > 0 \\ \alpha x, & \text{otherwise} \end{cases}$$where \(\alpha\) is a small constant (\(<1\))

- Range: (-∞, +∞)

- Description: Leaky ReLU addresses the "dying ReLU" problem by allowing a small gradient for negative inputs, preventing neurons from becoming inactive.

- Shortcomings:

- Hyperparameter Tuning: Leaky ReLU introduces a hyperparameter (the leak coefficient) that needs to be manually tuned, which can be cumbersome and time-consuming.

- Formula:

- Exponential Linear Unit (ELU):

- Formula:

$$f(x) = \begin{cases} x, & \text{if } x > 0 \\ \alpha (e^x - 1), & \text{otherwise} \end{cases}$$where \(\alpha\) is a Hyperparameter.

- Range: (-∞, +∞)

- Description: ELU function smoothly handles negative inputs and can converge faster than ReLU, but it may be computationally more expensive. ELU functions smoothly handle negative inputs and can converge faster than ReLU. They have a mean activation closer to zero, which helps to alleviate the vanishing gradient problem.

- Shortcomings:

- Computational Cost: ELU functions involve exponential operations, which may be computationally more expensive compared to ReLU and its variants.

- Formula:

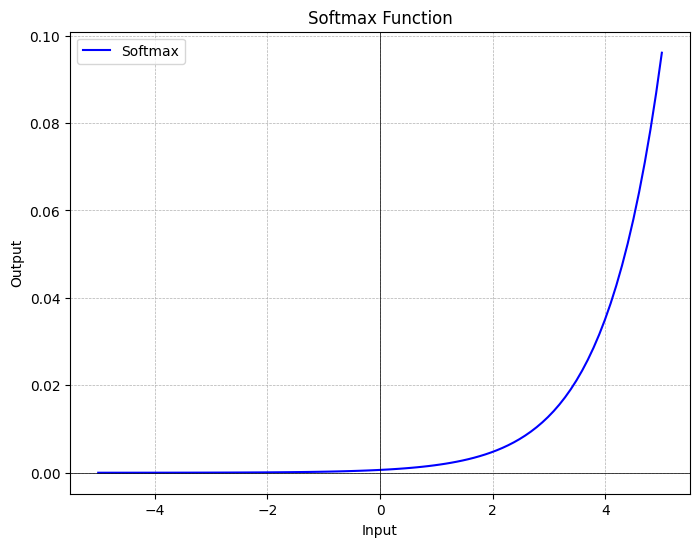

- Softmax Function:

- Formula: $$\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_{j=1}^k e^{x_j}}$$ for \(i=1,2, ... k\) where \(k\) is the number of classes.

- Range: (0, 1) for each class, with all probabilities summing up to 1

- Description: Softmax function is commonly used in the output layer of a neural network for multi-class classification tasks. It converts raw scores into probabilities. Softmax functions are used in the output layer of neural networks for multi-class classification tasks. They convert raw scores into probabilities, enabling the model to make predictions across multiple classes.

- Shortcomings:

- Sensitivity to Outliers: Softmax functions are sensitive to outliers and large input values, which may affect the stability and reliability of the predicted probabilities.

These activation functions play a crucial role in the training and performance of neural networks by controlling the output of neurons and enabling the network to learn complex relationships in the data.

Choosing the Right Activation Function

Selecting the appropriate activation function for a neural network is a crucial decision that can significantly affect the model's performance. The choice depends on various factors such as the type of problem (classification or regression), the depth of the network, the need for computational efficiency, and the nature of the data. Here are some guidelines and considerations for choosing the right activation function:- ReLU and its variants (Leaky ReLU, PReLU): are widely used in hidden layers due to their efficiency and effectiveness in mitigating vanishing gradient problems.

- Sigmoid and Tanh: are often used in binary classification problems or in the output layer of certain types of networks.

- Softmax: is specifically used for multi-class classification problems in the output layer.

- Swish and ELU: can be considered for deeper networks where traditional activation functions might not perform well.

In summary, the choice of activation function depends on the specific requirements of the task, the architecture of the neural network, and empirical performance on the validation data. It is often beneficial to experiment with different activation functions and monitor the training dynamics and model performance to select the most suitable one for a given problem. Additionally, using advanced techniques such as batch normalization and adaptive learning rate methods can help mitigate some of the shortcomings associated with activation functions.

References

- My Github repo with all codes.

- What is NLP (natural language processing)? (IBM) Contributor: Jim Holdsworth).

- What are large language models (LLMs)? (IBM)

- What is generative AI?(IBM), Contributor: Cole Stryker, Mark Scapicchio

- Udemy Bootcamp on Machine learning, Deep Learning and NLP, by Krish Naik

Some other interesting things to know:

- Visit my website on For Data, Big Data, Data-modeling, Datawarehouse, SQL, cloud-compute.

- Visit my website on Data engineering